Concatenating objects

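The concat function (in the main pandas namespace) does all of the heavy lifting of performing concatenation operations along an axis, while performing optional set logic (union or intersection) of the indexes (if any) on the other axes. Note that I say "if any" because there is only a single possible axis of concatenation for Series.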

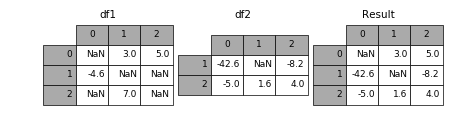

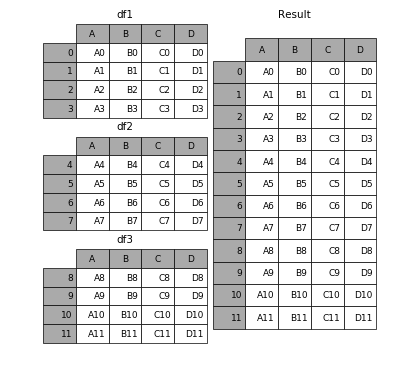

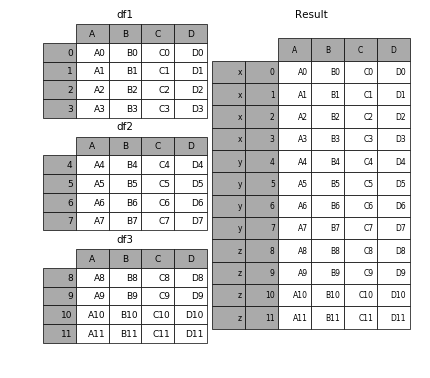

Before diving into all of the details of concat and what it can do, here is a simple example:

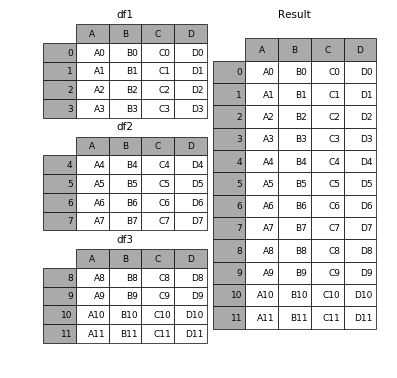

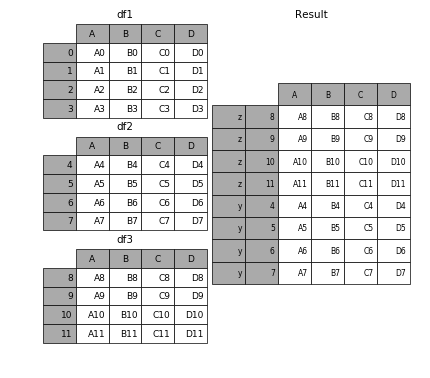

In [1]: df1 = pd.DataFrame({'A': ['A0', 'A1', 'A2', 'A3'],
   ...:                     'B': ['B0', 'B1', 'B2', 'B3'],
   ...:                     'C': ['C0', 'C1', 'C2', 'C3'],
   ...:                     'D': ['D0', 'D1', 'D2', 'D3']},
   ...:                    index=[0, 1, 2, 3])
   ...:

In [2]: df2 = pd.DataFrame({'A': ['A4', 'A5', 'A6', 'A7'],
   ...:                     'B': ['B4', 'B5', 'B6', 'B7'],
   ...:                     'C': ['C4', 'C5', 'C6', 'C7'],
   ...:                     'D': ['D4', 'D5', 'D6', 'D7']},
   ...:                    index=[4, 5, 6, 7])
   ...:

In [3]: df3 = pd.DataFrame({'A': ['A8', 'A9', 'A10', 'A11'],
   ...:                     'B': ['B8', 'B9', 'B10', 'B11'],
   ...:                     'C': ['C8', 'C9', 'C10', 'C11'],
   ...:                     'D': ['D8', 'D9', 'D10', 'D11']},
   ...:                    index=[8, 9, 10, 11])
   ...:

In [4]: frames = [df1, df2, df3]

In [5]: result = pd.concat(frames)
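For readers who want to run this example as a plain script, here is a minimal self-contained sketch. It assumes a hypothetical helper, make_df (not part of pandas), that fills each cell with the column name followed by the row label, which reproduces df1, df2 and df3 above.

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df2 = make_df("ABCD", [4, 5, 6, 7])
df3 = make_df("ABCD", [8, 9, 10, 11])

frames = [df1, df2, df3]
result = pd.concat(frames)  # stacks the rows (axis=0 by default)

print(result.shape)         # (12, 4)
print(result.loc[10, "A"])  # A10
```

The row labels of the inputs are kept as they are, so the result is indexed 0 through 11.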

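Like its sibling function on ndarrays, numpy.concatenate, pandas.concat takes a list or dict of homogeneously-typed objects and concatenates them with some configurable handling of "what to do with the other axes":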

pd.concat(objs, axis=0, join='outer', join_axes=None, ignore_index=False,
          keys=None, levels=None, names=None, verify_integrity=False,
          copy=True)

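- objs: a sequence or mapping of Series, DataFrame, or Panel objects. If a dict is passed, the sorted keys will be used as the keys argument, unless keys is passed, in which case the values will be selected (see below). Any None objects will be dropped silently unless they are all None, in which case a ValueError will be raised.
- axis: {0, 1, ...}, default 0. The axis to concatenate along.
- join: {'inner', 'outer'}, default 'outer'. How to handle indexes on the other axis (or axes): outer for union and inner for intersection.
- ignore_index: boolean, default False. If True, do not use the index values on the concatenation axis. The resulting axis will be labeled 0, ..., n - 1. This is useful if you are concatenating objects where the concatenation axis does not have meaningful indexing information. Note that the index values on the other axes are still respected in the join.
- join_axes: list of Index objects. Specific indexes to use for the other n - 1 axes instead of performing inner/outer set logic.
- keys: sequence, default None. Construct a hierarchical index using the passed keys as the outermost level. If multiple levels are passed, it should contain tuples.
- levels: list of sequences, default None. Specific levels (unique values) to use for constructing a MultiIndex. Otherwise they will be inferred from the keys.
- names: list, default None. Names for the levels in the resulting hierarchical index.
- verify_integrity: boolean, default False. Check whether the new concatenated axis contains duplicates. This can be very expensive relative to the actual data concatenation.
- copy: boolean, default True. If False, do not copy data unnecessarily.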
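A few of these parameters are easiest to see on tiny frames. The sketch below uses two hypothetical frames, df_a and df_b, whose row labels overlap at 1, and checks the described behaviour of None entries in objs, of verify_integrity and of ignore_index. It assumes a recent pandas release.

```python
import pandas as pd

# Two small hypothetical frames whose row labels overlap at 1.
df_a = pd.DataFrame({"v": [1, 2]}, index=[0, 1])
df_b = pd.DataFrame({"v": [3, 4]}, index=[1, 2])

# None entries in objs are dropped silently...
combined = pd.concat([df_a, None, df_b])
print(len(combined))  # 4

# ...unless every entry is None, in which case ValueError is raised.
raised_all_none = False
try:
    pd.concat([None, None])
except ValueError:
    raised_all_none = True

# verify_integrity=True rejects duplicate labels on the concatenation axis.
raised_duplicates = False
try:
    pd.concat([df_a, df_b], verify_integrity=True)
except ValueError:
    raised_duplicates = True

# ignore_index=True relabels the concatenation axis as 0, ..., n - 1.
relabeled = pd.concat([df_a, df_b], ignore_index=True)
print(relabeled.index.tolist())  # [0, 1, 2, 3]
```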

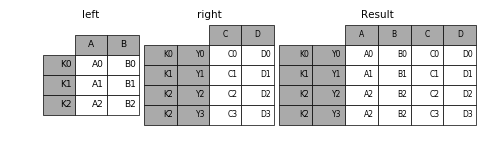

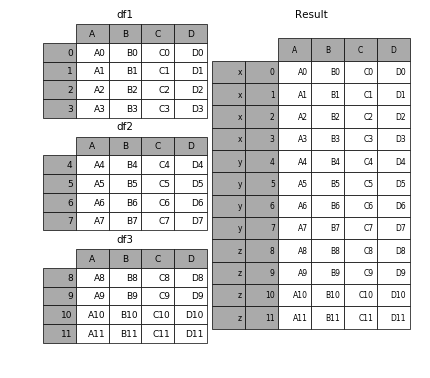

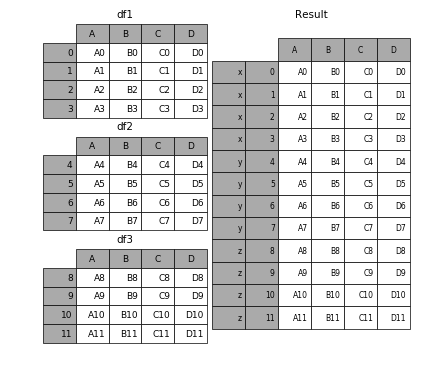

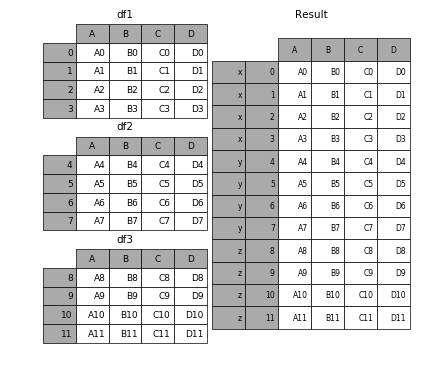

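Without a little bit of context and an example, many of these arguments don't make much sense. Let's revisit the example above. Suppose we wanted to associate specific keys with each of the pieces of the chopped-up DataFrame. We can do this using the keys argument: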

In [6]: result = pd.concat(frames, keys=['x', 'y', 'z'])

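As you can see (if you've read the rest of the documentation), the resulting object has a hierarchical index. This means that we can now do things like select out each chunk by key: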

In [7]: result.loc['y']
Out[7]:
    A   B   C   D
4  A4  B4  C4  D4
5  A5  B5  C5  D5
6  A6  B6  C6  D6
7  A7  B7  C7  D7
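A self-contained sketch of the keys example, again assuming the hypothetical make_df helper. It selects with .loc, the label-based indexer; the .ix indexer seen in older examples was removed in pandas 1.0.

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df2 = make_df("ABCD", [4, 5, 6, 7])
df3 = make_df("ABCD", [8, 9, 10, 11])

result = pd.concat([df1, df2, df3], keys=["x", "y", "z"])

print(result.index.nlevels)       # 2: the key, then the original row label
chunk = result.loc["y"]           # only the rows that came from df2
print(chunk.equals(df2))          # True
print(result.loc[("z", 9), "B"])  # B9
```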

It's not a stretch to see how this can be very useful. More detail on this functionality below.

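Note

It is worth noting, however, that concat (and therefore append) makes a full copy of the data, and that constantly reusing this function can create a significant performance hit. If you need to combine several datasets, build the list of pieces first (for example with a list comprehension) and call concat once: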

frames = [ process_your_file(f) for f in files ]

result = pd.concat(frames)
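The difference between the two patterns can be sketched as follows. load_chunk is a hypothetical stand-in for process_your_file; the point is only that the second pattern copies the data once, whereas the first copies everything accumulated so far on every iteration.

```python
import pandas as pd


def load_chunk(i):
    """Hypothetical stand-in for process_your_file: one small frame."""
    return pd.DataFrame({"chunk": [i] * 3, "value": range(3)})


# Pattern to avoid: each concat call copies all rows gathered so far,
# so the total amount of copying grows with the square of the chunk count.
slow = load_chunk(0)
for i in range(1, 5):
    slow = pd.concat([slow, load_chunk(i)], ignore_index=True)

# Preferred pattern: build the list first, then concatenate once.
frames = [load_chunk(i) for i in range(5)]
fast = pd.concat(frames, ignore_index=True)

print(fast.shape)         # (15, 2)
print(fast.equals(slow))  # True
```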

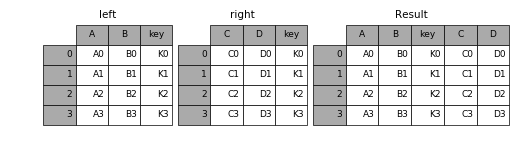

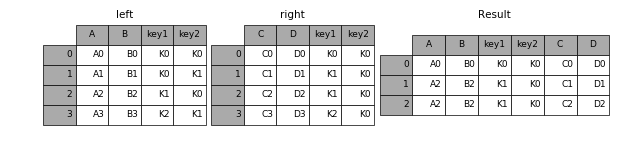

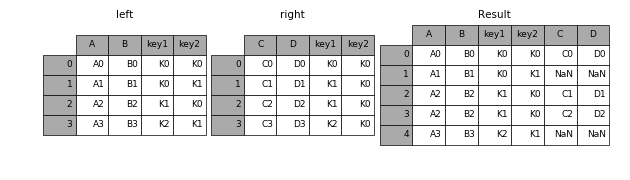

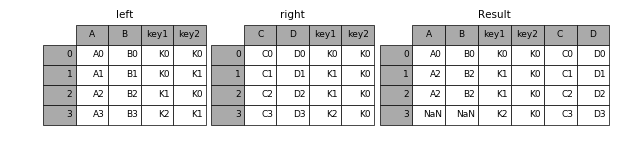

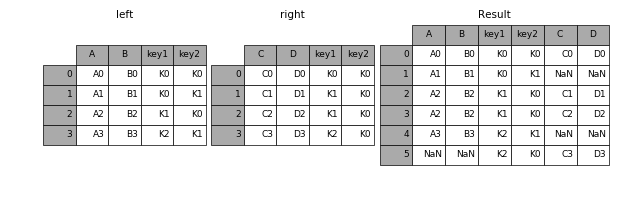

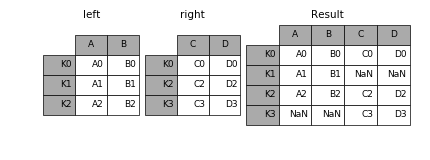

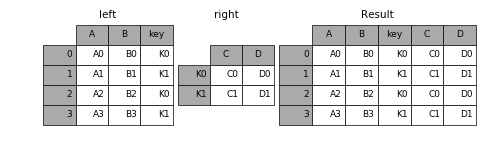

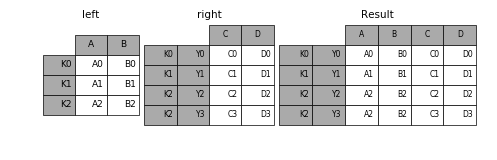

Set logic on the other axes

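When gluing together multiple DataFrames (or Panels or...), for example, you have a choice of how to handle the other axes (other than the one being concatenated). This can be done in three ways: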

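- Take the (sorted) union of them all, join='outer'. This is the default option, as it results in zero information loss.
- Take the intersection, join='inner'.
- Use a specific index (in the case of DataFrame) or indexes (in the case of Panel or future higher-dimensional objects), i.e. the join_axes argument.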

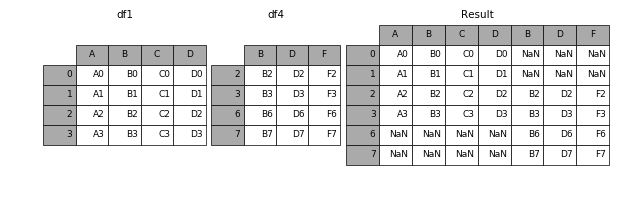

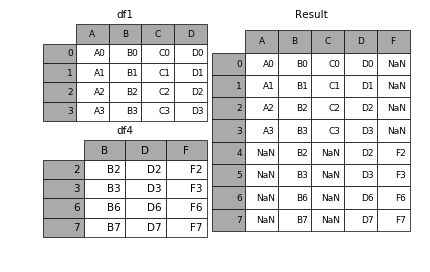

Here is an example of each of these methods. First, the default join='outer' behavior:

In [8]: df4 = pd.DataFrame({'B': ['B2', 'B3', 'B6', 'B7'],
   ...:                     'D': ['D2', 'D3', 'D6', 'D7'],
   ...:                     'F': ['F2', 'F3', 'F6', 'F7']},
   ...:                    index=[2, 3, 6, 7])
   ...:

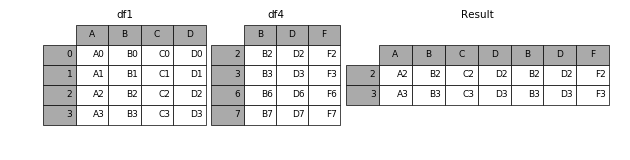

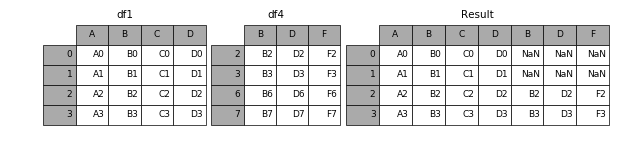

In [9]: result = pd.concat([df1, df4], axis=1)
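The outer join can be checked in a short script, assuming the hypothetical make_df helper as before. In recent pandas releases, sorting of the non-concatenation axis is governed by a separate sort argument that defaults to False; for these row labels the union comes out in ascending order either way.

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df4 = make_df("BDF", [2, 3, 6, 7])

result = pd.concat([df1, df4], axis=1)  # join="outer" is the default

print(result.index.tolist())    # [0, 1, 2, 3, 6, 7]: union of row labels
print(result.columns.tolist())  # ['A', 'B', 'C', 'D', 'B', 'D', 'F']
# Rows 0-1 lack df4's 3 columns and rows 6-7 lack df1's 4 columns.
print(int(result.isna().sum().sum()))  # 14
```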

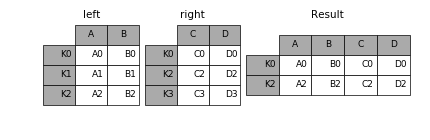

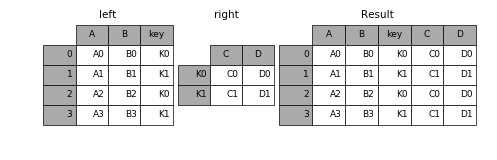

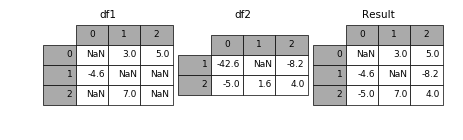

Note that the row indexes have been unioned and sorted. Here is the same thing with join='inner':

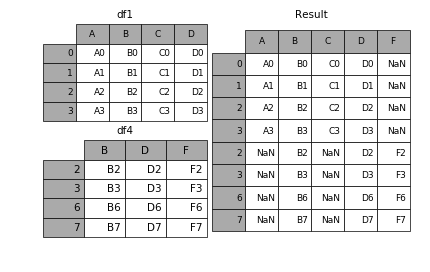

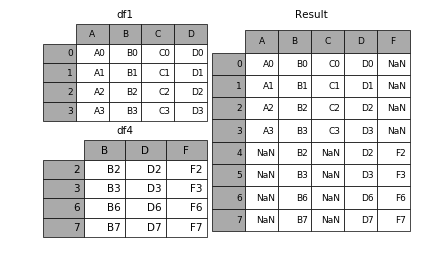

In [10]: result = pd.concat([df1, df4], axis=1, join='inner')
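The same check for join='inner' (hypothetical make_df helper again) keeps only the row labels 2 and 3 that both frames share, so no missing values are introduced:

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df4 = make_df("BDF", [2, 3, 6, 7])

result = pd.concat([df1, df4], axis=1, join="inner")

print(result.index.tolist())           # [2, 3]: intersection of row labels
print(result.shape)                    # (2, 7)
print(bool(result.isna().any().any()))  # False
```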

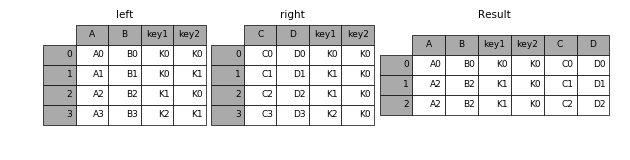

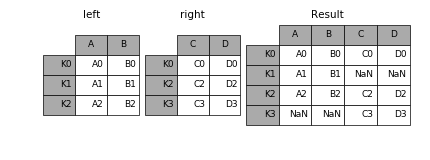

Lastly, suppose we just wanted to reuse the exact index from the original DataFrame:

In [11]: result = pd.concat([df1, df4], axis=1, join_axes=[df1.index])
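The join_axes argument was deprecated in pandas 0.25 and removed in 1.0. On those releases, one way to get the same result, sketched here under that assumption and with the hypothetical make_df helper, is to reindex the other frame to df1's row labels before concatenating:

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df4 = make_df("BDF", [2, 3, 6, 7])

# Align df4 to df1's rows first; rows 6 and 7 are dropped, 0 and 1 become NaN.
result = pd.concat([df1, df4.reindex(df1.index)], axis=1)

print(result.index.tolist())  # [0, 1, 2, 3]
print(result.loc[2, "F"])     # F2
```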

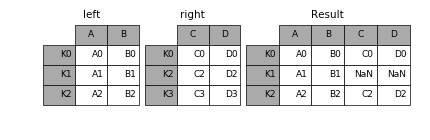

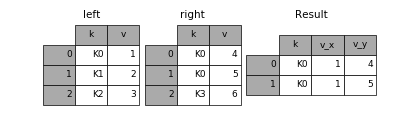

Concatenating using append

A useful shortcut to concat are the append instance methods on Series and DataFrame. These methods actually predate concat. They concatenate along axis=0, namely the index:

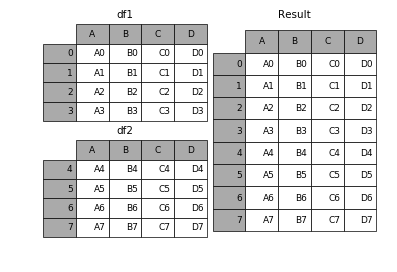

In [12]: result = df1.append(df2)
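DataFrame.append and Series.append were deprecated in pandas 1.4 and removed in 2.0. A minimal sketch of the equivalent concat calls, assuming a recent pandas and the hypothetical make_df helper:

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df2 = make_df("ABCD", [4, 5, 6, 7])
df3 = make_df("ABCD", [8, 9, 10, 11])

result = pd.concat([df1, df2])        # what df1.append(df2) returned
result3 = pd.concat([df1, df2, df3])  # what df1.append([df2, df3]) returned

print(result.index.tolist())  # [0, 1, 2, 3, 4, 5, 6, 7]
print(len(result3))           # 12
```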

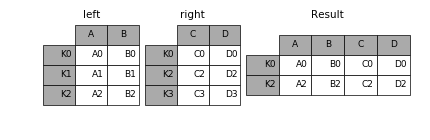

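In the case of DataFrame, the indexes do not need to be disjoint (labels present in both objects, such as 2 and 3 here, simply appear twice in the result), and neither do the columns: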

In [13]: result = df1.append(df4)
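To see what happens to the overlapping labels, here is the concat equivalent of df1.append(df4), again assuming the hypothetical make_df helper:

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df4 = make_df("BDF", [2, 3, 6, 7])

result = pd.concat([df1, df4])

print(result.index.tolist())    # [0, 1, 2, 3, 2, 3, 6, 7]: duplicates kept
print(result.columns.tolist())  # ['A', 'B', 'C', 'D', 'F']: union of columns
print(len(result.loc[2]))       # 2: one row from each input
```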

append may take multiple objects to concatenate:

In [14]: result = df1.append([df2, df3])

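Note

Unlike the list.append method, which appends to the original list and returns None, append here does not modify df1; it returns a copy of df1 with df2 appended.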
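A small sketch contrasting the two behaviours. pd.concat stands in for DataFrame.append, which is unavailable in pandas 2.0 and later; df1 and df2 are rebuilt with dict comprehensions holding the same values as above.

```python
import pandas as pd

# list.append mutates the list in place and returns None...
items = [1, 2]
returned = items.append(3)
print(returned, items)  # None [1, 2, 3]

# ...whereas concatenating DataFrames builds a new object and leaves
# the inputs untouched.
df1 = pd.DataFrame({c: [f"{c}{i}" for i in range(4)] for c in "ABCD"})
df2 = pd.DataFrame({c: [f"{c}{i}" for i in range(4, 8)] for c in "ABCD"},
                   index=range(4, 8))
combined = pd.concat([df1, df2])
print(len(df1), len(combined))  # 4 8
```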

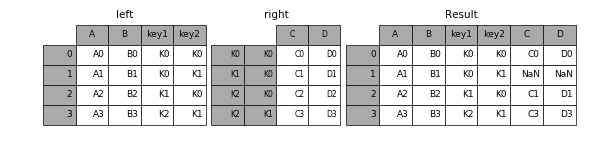

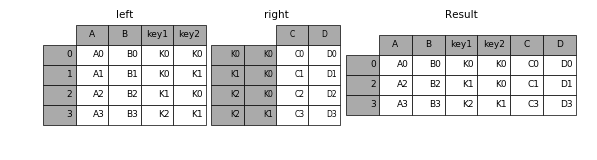

Ignoring indexes on the concatenation axis

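For DataFrames which don't have a meaningful index, you may wish to append them and ignore the fact that they may have overlapping indexes. To do this, use the ignore_index argument: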

In [15]: result = pd.concat([df1, df4], ignore_index=True)
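A quick check of the relabeled index, assuming the hypothetical make_df helper. The rows from df4 keep their order but lose their original labels 2, 3, 6 and 7:

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


df1 = make_df("ABCD", [0, 1, 2, 3])
df4 = make_df("BDF", [2, 3, 6, 7])

result = pd.concat([df1, df4], ignore_index=True)

print(result.index.tolist())  # [0, 1, 2, 3, 4, 5, 6, 7]
print(result.loc[4, "B"])     # B2: the first row of df4, now labeled 4
```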

This is also a valid argument to DataFrame.append:

In [16]: result = df1.append(df4, ignore_index=True)

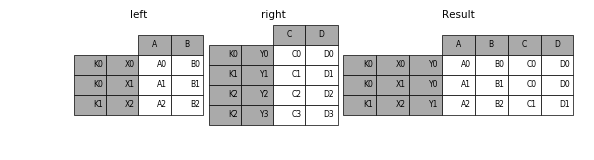

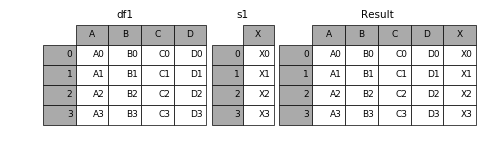

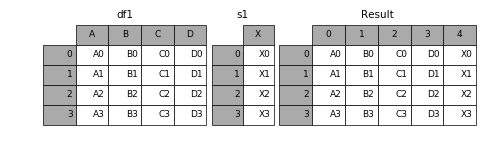

Concatenating with mixed ndims

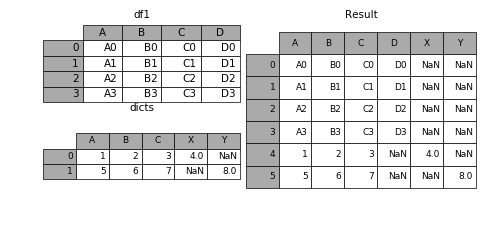

You can concatenate a mix of Series and DataFrames. The Series will be transformed to DataFrames, with the name of the Series as the column name.

In [17]: s1 = pd.Series(['X0', 'X1', 'X2', 'X3'], name='X')

In [18]: result = pd.concat([df1, s1], axis=1)
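A self-contained version of the mixed example; df1 is rebuilt with a dict comprehension holding the same values as above:

```python
import pandas as pd

df1 = pd.DataFrame({c: [f"{c}{i}" for i in range(4)] for c in "ABCD"})
s1 = pd.Series(["X0", "X1", "X2", "X3"], name="X")

result = pd.concat([df1, s1], axis=1)

print(result.columns.tolist())  # ['A', 'B', 'C', 'D', 'X']: name becomes label
print(result.loc[3, "X"])       # X3
```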

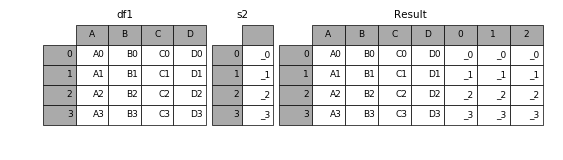

If unnamed Series are passed, they will be numbered consecutively.

In [19]: s2 = pd.Series(['_0', '_1', '_2', '_3'])

In [20]: result = pd.concat([df1, s2, s2, s2], axis=1)
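The automatic numbering of unnamed Series can be checked directly, using the same rebuilt df1 (an assumption of this sketch, not part of the original session):

```python
import pandas as pd

df1 = pd.DataFrame({c: [f"{c}{i}" for i in range(4)] for c in "ABCD"})
s2 = pd.Series(["_0", "_1", "_2", "_3"])  # no name

result = pd.concat([df1, s2, s2, s2], axis=1)

print(result.columns.tolist())  # ['A', 'B', 'C', 'D', 0, 1, 2]
```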

Passing ignore_index=True will drop all name references.

In [21]: result = pd.concat([df1, s1], axis=1, ignore_index=True)
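With axis=1 the concatenation axis is the columns, so ignore_index=True replaces every column label, including the original A to D, with its position. A sketch using the same rebuilt df1:

```python
import pandas as pd

df1 = pd.DataFrame({c: [f"{c}{i}" for i in range(4)] for c in "ABCD"})
s1 = pd.Series(["X0", "X1", "X2", "X3"], name="X")

result = pd.concat([df1, s1], axis=1, ignore_index=True)

print(result.columns.tolist())  # [0, 1, 2, 3, 4]
print(result.loc[0, 4])         # X0: the Series is now column 4
```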

More concatenating with group keys

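A fairly common use of the keys argument is to override the column names when creating a new DataFrame based on existing Series. Notice how the default behaviour lets the resulting DataFrame inherit the parent Series' names, when these exist.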

In [22]: s3 = pd.Series([0, 1, 2, 3], name='foo')

In [23]: s4 = pd.Series([0, 1, 2, 3])

In [24]: s5 = pd.Series([0, 1, 4, 5])

In [25]: pd.concat([s3, s4, s5], axis=1)

Out[25]:
   foo  0  1
0    0  0  0
1    1  1  1
2    2  2  4
3    3  3  5

Through the keys argument we can override the existing column names.

In [26]: pd.concat([s3, s4, s5], axis=1, keys=['red','blue','yellow'])

Out[26]:
   red  blue  yellow
0    0     0       0
1    1     1       1
2    2     2       4
3    3     3       5
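Both behaviours in one short script, checking only the column labels:

```python
import pandas as pd

s3 = pd.Series([0, 1, 2, 3], name="foo")
s4 = pd.Series([0, 1, 2, 3])
s5 = pd.Series([0, 1, 4, 5])

# Default: named Series keep their name, unnamed ones are numbered.
default = pd.concat([s3, s4, s5], axis=1)
# keys replaces all of the column labels at once.
renamed = pd.concat([s3, s4, s5], axis=1, keys=["red", "blue", "yellow"])

print(default.columns.tolist())  # ['foo', 0, 1]
print(renamed.columns.tolist())  # ['red', 'blue', 'yellow']
```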

Let's now consider a variation on the very first example presented:

In [27]: result = pd.concat(frames, keys=['x', 'y', 'z'])

You can also pass a dict to concat, in which case the dict keys will be used for the keys argument (unless other keys are specified):

In [28]: pieces = {'x': df1, 'y': df2, 'z': df3}

In [29]: result = pd.concat(pieces)

In [30]: result = pd.concat(pieces, keys=['z', 'y'])

The MultiIndex created has levels that are constructed from the passed keys and the index of the DataFrame pieces:

In [31]: result.index.levels

Out[31]: FrozenList([[u'z', u'y'], [4, 5, 6, 7, 8, 9, 10, 11]])
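A sketch checking which keys end up in the outer index level, assuming the hypothetical make_df helper. When keys is given with a dict, only the listed pieces are used, in the listed order:

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


pieces = {
    "x": make_df("ABCD", [0, 1, 2, 3]),
    "y": make_df("ABCD", [4, 5, 6, 7]),
    "z": make_df("ABCD", [8, 9, 10, 11]),
}

result_all = pd.concat(pieces)                   # dict keys become the keys
result_zy = pd.concat(pieces, keys=["z", "y"])   # subset, in this order

print(result_all.index.get_level_values(0).unique().tolist())  # ['x', 'y', 'z']
print(result_zy.index.get_level_values(0).unique().tolist())   # ['z', 'y']
print(len(result_zy))                                          # 8
```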

If you wish to specify other levels (as will occasionally be the case), you can do so using the levels argument:

In [32]: result = pd.concat(pieces, keys=['x', 'y', 'z'],
   ....:                    levels=[['z', 'y', 'x', 'w']],
   ....:                    names=['group_key'])
   ....:

In [33]: result.index.levels

Out[33]: FrozenList([[u'z', u'y', u'x', u'w'], [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]])
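A short sketch confirming that the explicit levels, including the unused 'w', and the level name are kept; it assumes the hypothetical make_df helper and a recent pandas:

```python
import pandas as pd


def make_df(columns, index):
    """Hypothetical helper: each cell is '<column><row label>', e.g. 'A0'."""
    return pd.DataFrame(
        {c: [f"{c}{i}" for i in index] for c in columns}, index=index
    )


pieces = {
    "x": make_df("ABCD", [0, 1, 2, 3]),
    "y": make_df("ABCD", [4, 5, 6, 7]),
    "z": make_df("ABCD", [8, 9, 10, 11]),
}

result = pd.concat(pieces, keys=["x", "y", "z"],
                   levels=[["z", "y", "x", "w"]],
                   names=["group_key"])

print(result.index.levels[0].tolist())  # ['z', 'y', 'x', 'w']: order kept
print(list(result.index.names))         # ['group_key', None]
```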

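Yes, this is fairly esoteric, but it is actually necessary for implementing things like GroupBy, where the order of a categorical variable is meaningful.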

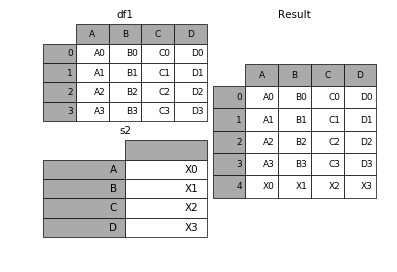

Appending rows to a DataFrame

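While not especially efficient (since a new object must be created), you can append a single row to a DataFrame by passing a Series or dict to append, which returns a new DataFrame as above.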

In [34]: s2 = pd.Series(['X0', 'X1', 'X2', 'X3'], index=['A', 'B', 'C', 'D'])

In [35]: result = df1.append(s2, ignore_index=True)

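You should use ignore_index with this method to instruct DataFrame to discard its index. If you wish to preserve the index, you should construct an appropriately-indexed DataFrame and append or concatenate those objects.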
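With pandas 2.0 and later, where append is gone, one minimal equivalent (a sketch, not the only way) turns the Series into a one-row frame with to_frame().T and concatenates it; df1 is rebuilt with a dict comprehension:

```python
import pandas as pd

df1 = pd.DataFrame({c: [f"{c}{i}" for i in range(4)] for c in "ABCD"})
s2 = pd.Series(["X0", "X1", "X2", "X3"], index=["A", "B", "C", "D"])

# to_frame().T makes a 1 x 4 frame whose columns are the Series' index.
row = s2.to_frame().T
result = pd.concat([df1, row], ignore_index=True)

print(result.shape)              # (5, 4)
print(result.iloc[-1].tolist())  # ['X0', 'X1', 'X2', 'X3']
```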

You can also pass a list of dicts or Series:

In [36]: dicts = [{'A': 1, 'B': 2, 'C': 3, 'X': 4},
   ....:          {'A': 5, 'B': 6, 'C': 7, 'Y': 8}]
   ....:

In [37]: result = df1.append(dicts, ignore_index=True)
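On recent pandas the list-of-dicts case can be sketched the same way, by first building a DataFrame from the dicts; keys missing from a dict, or from df1, become NaN:

```python
import pandas as pd

df1 = pd.DataFrame({c: [f"{c}{i}" for i in range(4)] for c in "ABCD"})
dicts = [{"A": 1, "B": 2, "C": 3, "X": 4},
         {"A": 5, "B": 6, "C": 7, "Y": 8}]

result = pd.concat([df1, pd.DataFrame(dicts)], ignore_index=True)

print(result.columns.tolist())  # ['A', 'B', 'C', 'D', 'X', 'Y']
print(result.shape)             # (6, 6)
```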